Intro

Every time we start a new web project, we need to set up all the necessary tools. Some of these tools are:

- Web server (to serve requests from the client)

- Programming language (to handle the logic of the application)

- Database (to store data)

In this case I am going to focus on a web app using Nginx as the web server, PHP as the back-end programming language and MySQL as the database.

Here come the questions:

- Is it better to run the web server and the database as services on my personal computer?

- How can I keep this project's PHP configuration separate from those of other projects?

- How can I have a separate database running for this project and for each of my other projects?

- How can another developer (or tester) run the same environment as mine without going through the whole installation process?

Well, let's try to solve all that by using Docker. But what is that?

"Docker containers wrap a piece of software in a complete filesystem that contains everything needed to run... This guarantees that the software will always run the same, regardless of its environment."

So basically, you have one container for each tool you need. These containers can be updated or modified individually, and together they make up your working environment. For a more general idea, please read the Docker documentation.

So this is the goal for today:

- Have a separate Docker configuration for each project.

Show me the goods

Requirements: Docker and Docker Compose installed on your machine.

Create a folder that will hold all our projects. I'll call it "Code".

~: mkdir Code

Create the first project, called "Mouse", and let's jump into it.

~: mkdir Code/Mouse

~: cd Code/Mouse

Create a file called "docker-compose.yaml". This file will hold the configuration for all the containers of this specific project.

~/Code/Mouse: touch docker-compose.yaml
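The folders and empty files that this walkthrough creates one command at a time (nginx/, php/ and data/ appear in later sections) can also be set up in a single step. A minimal sketch, assuming a Bash-compatible shell; the function name `new_project` is hypothetical:

```shell
# Sketch: create the Mouse project layout in one go.
# new_project is a hypothetical helper; the layout mirrors the folders and
# files this article creates step by step.
new_project() {
  local base=$1 name=$2
  local dir="$base/$name"
  # -p creates missing parents (e.g. ~/Code) and does not fail if they exist.
  mkdir -p "$dir/nginx" "$dir/php" "$dir/data"
  # touch creates empty files and leaves existing content untouched,
  # so running this twice is safe.
  touch "$dir/docker-compose.yaml" \
        "$dir/nginx/default.conf" "$dir/nginx/Dockerfile" \
        "$dir/php/Dockerfile"
}

# Usage:
#   new_project ~/Code Mouse
```

The files are left empty on purpose; their content comes from the sections below.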

Open the file, add these lines and save it:

```yaml
nginx:
  image: nginx:latest
  ports:
    - "80:80"
```

Now let's run it:

~/Code/Mouse: docker-compose up -d

What happens is that docker-compose downloads the latest version of the nginx image, starts a container from it and publishes the container's port 80 on port 80 of our machine.

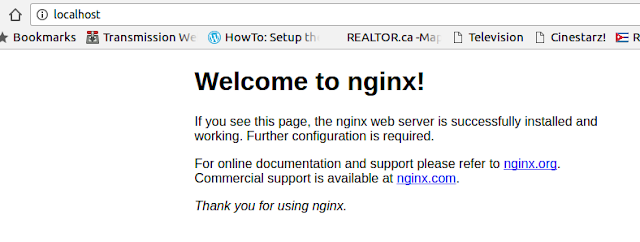

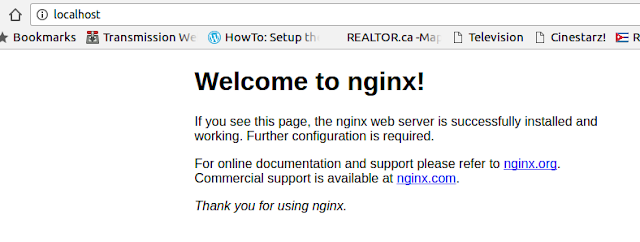

Now point your browser to http://localhost and you should see Nginx up and running.
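If you prefer checking from the terminal, for instance in a script that runs right after `docker-compose up -d`, a small retry loop around curl does the job. This is a sketch assuming curl is installed on your machine; `wait_for` is a hypothetical helper name:

```shell
# Sketch: retry a command until it succeeds or we run out of attempts.
# wait_for is a hypothetical helper; returns 0 on success, 1 otherwise.
wait_for() {
  local attempts=$1; shift
  local i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Pause between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep 1
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage: give Nginx up to 10 attempts to answer on port 80.
# curl -f makes HTTP errors (such as a 403) count as failures.
#   wait_for 10 curl -fsS -o /dev/null http://localhost && echo "Nginx is up"
```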

Let's continue by adding PHP to this docker-compose configuration. First we are going to create two separate folders holding specific configurations for our containers. We need them because Nginx has to communicate with PHP, and for that we will build custom images based on the downloaded ones.

To be safe, let's stop the container:

~/Code/Mouse: docker-compose down

First we will create a folder called "nginx", and in it we will create 2 files: one holds the Nginx configuration (default.conf) and the other the configuration of our custom Nginx image (Dockerfile).

~/Code/Mouse: mkdir nginx

~/Code/Mouse: cd nginx

~/Code/Mouse/nginx: touch default.conf

~/Code/Mouse/nginx: touch Dockerfile

Now add this code to default.conf

```nginx
server {
    listen 80 default_server;
    root /var/www/html;
    index index.html index.php;
    charset utf-8;

    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }

    location = /favicon.ico { access_log off; log_not_found off; }
    location = /robots.txt  { access_log off; log_not_found off; }

    access_log off;
    error_log /var/log/nginx/error.log error;

    sendfile off;

    client_max_body_size 100m;

    location ~ \.php$ {
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_pass php:9000;
        fastcgi_index index.php;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_intercept_errors off;
        fastcgi_buffer_size 16k;
        fastcgi_buffers 4 16k;
    }

    location ~ /\.ht {
        deny all;
    }
}
```
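The `try_files` line is what lets a PHP front controller handle "pretty" URLs: existing files are served directly and everything else goes to index.php. As a rough mental model only (real Nginx also applies the `index` directive to directories and runs the `\.php$` location afterwards), the fallback order can be sketched with a hypothetical helper:

```shell
# Rough model of: try_files $uri $uri/ /index.php?$query_string;
# Prints the URI nginx would end up handling, given a document root.
# resolve_try_files is a hypothetical helper for illustration only.
resolve_try_files() {
  local root=$1 uri=$2 query=${3:-}
  if [ -f "$root$uri" ]; then
    # 1. $uri: an existing file is served as-is.
    printf '%s\n' "$uri"
  elif [ -d "$root$uri" ]; then
    # 2. $uri/: an existing directory (nginx then looks for an index file).
    printf '%s/\n' "${uri%/}"
  else
    # 3. Fallback: hand the request to index.php with the original query string.
    printf '/index.php?%s\n' "$query"
  fi
}
```

For example, with a document root that contains style.css, a request for /users/42?page=2 falls through to /index.php?page=2.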

and this to the Dockerfile:

```dockerfile
FROM nginx:latest

COPY ./default.conf /etc/nginx/conf.d/default.conf
```

OK, let's create the folder for PHP:

~/Code/Mouse/nginx: cd ..

~/Code/Mouse: mkdir php

~/Code/Mouse: cd php

~/Code/Mouse/php: touch Dockerfile

Let's edit that PHP Dockerfile:

```dockerfile
FROM php:7.0-fpm
```

OK, let's get back to our docker-compose.yaml and reflect the changes:

```yaml
nginx:
  build: ./nginx/
  ports:
    - "80:80"
  links:
    - php
  volumes:
    - .:/var/www/html
php:
  build: ./php/
  expose:
    - 9000
  volumes:
    - .:/var/www/html
```

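Notice `links:` in the configuration: it tells Compose that the nginx container must be linked to the php container, which lets nginx reach it under the hostname `php`. That is the name default.conf uses in `fastcgi_pass php:9000;`.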

We have to build the new custom images, so let's do:

~/Code/Mouse/php: cd ..

~/Code/Mouse: docker-compose build

Remember this: you only need to run docker-compose build again if you change a Dockerfile or a file it copies into the image (such as nginx/default.conf).
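To make that rule mechanical, one option is to keep a checksum of each image's build inputs and rebuild only when it changes. A sketch using the POSIX `cksum` tool; the stamp files and the helper names are hypothetical conventions for illustration, not something docker-compose provides:

```shell
# Sketch: decide whether an image needs `docker-compose build` again by
# remembering a checksum of its build inputs in a stamp file.
fingerprint() {
  # cksum reading stdin prints "<CRC> <byte count>" for the concatenated files.
  cat "$@" | cksum
}

# Succeeds (returns 0) when there is no stamp yet or the inputs changed.
needs_rebuild() {
  local stamp=$1; shift
  [ ! -f "$stamp" ] || [ "$(fingerprint "$@")" != "$(cat "$stamp")" ]
}

# Records the current checksum after a successful build.
mark_built() {
  local stamp=$1; shift
  fingerprint "$@" > "$stamp"
}

# Usage from ~/Code/Mouse (the nginx image also copies default.conf):
#   if needs_rebuild .nginx.stamp nginx/Dockerfile nginx/default.conf; then
#     docker-compose build nginx &&
#       mark_built .nginx.stamp nginx/Dockerfile nginx/default.conf
#   fi
```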

Notice that because we already downloaded the nginx image, building the custom nginx image doesn't need to download it again.

OK, let's run it:

~/Code/Mouse: docker-compose up -d

and go to http://localhost. Oh my! A big 403 error.

So what happened? In docker-compose.yaml we added a new setting called volumes. It mounts a folder from our local computer into the container. In this case

`.:/var/www/html`

maps the folder where the project is (.) to the document root in the container, /var/www/html. Take a look at nginx/default.conf: there we declared that the document root is /var/www/html. Our project folder has no index.html or index.php yet, so for http://localhost/ Nginx only finds a directory it is not allowed to list, and answers with 403 Forbidden.
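The mapping is a simple prefix substitution: whatever sits at a path inside the project folder appears at the same relative path under /var/www/html in the container. A tiny sketch of that rule; `container_path` is a hypothetical helper:

```shell
# Sketch of what the volume entry `.:/var/www/html` means for paths:
# <project>/<path> on your machine appears as /var/www/html/<path> in the container.
container_path() {
  local host_path=${1#./}            # drop a leading "./"
  local mount_point=${2:-/var/www/html}
  printf '%s/%s\n' "$mount_point" "$host_path"
}
```

So `container_path ./index.php` prints /var/www/html/index.php, which is exactly where Nginx looks for the index file of http://localhost/.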

So let's add an index.php to our Mouse folder

~/Code/Mouse: touch index.php

with this content:

```php
<?php

phpinfo();
```

and reload http://localhost: you should now see the PHP information page.

We have repeated the /var/www/html mount point in two places. Let's define it in only one place. Edit docker-compose.yaml:

```yaml
nginx:
  build: ./nginx/
  ports:
    - "80:80"
  links:
    - php
  volumes_from:
    - app
php:
  build: ./php/
  expose:
    - 9000
  volumes_from:
    - app
app:
  image: php:7.0-fpm
  volumes:
    - .:/var/www/html
  command: "true"
```

Stop the containers and restart them:

~/Code/Mouse: docker-compose down

~/Code/Mouse: docker-compose up -d

This new configuration tells nginx and php that their volumes come from the app container. Because this container uses the php:7.0-fpm image, which we already downloaded when building our custom PHP image, nothing new is downloaded. The last part, command: "true", makes the container run `true`, which exits immediately, so no process keeps running in it (it works more like a reference container). A container only uses memory and CPU while a process keeps running inside it. If we do

~/Code/Mouse: docker ps

we can see that the commands running in the nginx and php containers do not end. But we don't see our app container (the one with command "true") unless we do

~/Code/Mouse: docker ps -a

and it will show up as an exited container.

Add the data!

Let's add MySQL, but first let's prepare PHP to handle database connections by editing its Dockerfile:

```dockerfile
FROM php:7.0-fpm

RUN docker-php-ext-install pdo_mysql
```

Notice the last line we added to the Dockerfile in the php folder. Because of this change we need to rebuild the custom image:

~/Code/Mouse: docker-compose down

~/Code/Mouse: docker-compose build
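Once the containers are running again, you can check that the extension really made it into the image: `php -m` prints the loaded modules, one per line. A small sketch with a hypothetical helper name, assuming the php service is up:

```shell
# Sketch: check that a PHP extension is loaded by scanning `php -m` output.
# has_php_module is a hypothetical helper; tr strips carriage returns a TTY
# may add, and grep -x only accepts exact (case-insensitive) line matches.
has_php_module() {
  tr -d '\r' | grep -qixF "$1"
}

# Usage from ~/Code/Mouse once the containers are up
# (-T disables TTY allocation so the output can be piped):
#   docker-compose exec -T php php -m | has_php_module pdo_mysql && echo "pdo_mysql is installed"
```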

Let's create a folder to store the database files

~/Code/Mouse: mkdir data

Edit docker-compose.yaml:

```yaml
nginx:
  build: ./nginx/
  ports:
    - "80:80"
  links:
    - php
  volumes_from:
    - app
php:
  build: ./php/
  links:
    - mysql
  expose:
    - 9000
  volumes_from:
    - app
app:
  image: php:7.0-fpm
  volumes:
    - .:/var/www/html
  command: "true"
mysql:
  image: mysql:latest
  volumes_from:
    - data
  environment:
    MYSQL_ROOT_PASSWORD: secret
    MYSQL_DATABASE: project
    MYSQL_USER: project
    MYSQL_PASSWORD: project
data:
  image: mysql:latest
  volumes:
    - ./data:/var/lib/mysql
  command: "true"
```

Now run:

~/Code/Mouse: docker-compose up -d

Some things to notice here:

- Just as nginx is linked to php, php is now linked to mysql.

- The mysql container has some environment variables that we can use to configure the database.

- The data container holds the path where the database files live. You can see where it is located by running `docker inspect <CONTAINER_ID>`. The container ID is shown by `docker ps -a`.

In this case, the MySQL-based container that exited (our data container) has the container ID ac4ec0d2a45e. We can use this command to inspect its whole configuration:

~/Code/Mouse: docker inspect ac4ec0d2a45e

From here you can see the IP address, volumes and other information about the container.
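`docker inspect` also accepts a Go template through `--format`, which is handy for pulling out just the mounts. A sketch, assuming a Bash-compatible shell; `mount_for` is a hypothetical helper that prints the host folder mounted at a given container path:

```shell
# Sketch: print the host folder mounted at a given container path.
# mount_for is a hypothetical helper reading "source destination" lines, e.g.:
#   docker inspect --format '{{range .Mounts}}{{.Source}} {{.Destination}}{{println}}{{end}}' <CONTAINER_ID> | mount_for /var/lib/mysql
mount_for() {
  local dest=$1 line
  while IFS= read -r line; do
    case $line in
      # Match on the trailing " <dest>" so host paths may contain spaces.
      *" $dest") printf '%s\n' "${line% "$dest"}" ;;
    esac
  done
}
```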

How can I see my data?

Let's open a session in the database container. In my case the running mysql container is 0ce29142d832, so let's do:

docker exec -ti 0ce29142d832 /bin/bash

and we are inside the container. From here we can connect to MySQL with `mysql -uroot -psecret`. Notice that these credentials were defined in the mysql section of docker-compose.yaml.

Stopping containers and starting them

So far we have seen how to start and stop the containers with:

- `docker-compose up -d` (create and start the containers in the background)

- `docker-compose down` (stop and remove the containers. Warning: anything stored only inside the containers is lost.)

but there are also:

- `docker-compose start` (start existing containers)

- `docker-compose stop` (stop the containers without removing them)

My recommendation is that, once your containers are created, you use the stop and start commands. For more information, see the Compose command-line reference.

Where is my database?

Remember that in our docker-compose.yaml the database lives inside our project folder, in the "data" folder. Why did we do this? The answer is simple: if we don't specify a volume for our database, its data can be removed when we run docker-compose down.

Conclusion

As you can see, we defined inside a project called `Mouse` all the tools we are going to need. My advice:

- If you need a specific PHP or MySQL version, search the Docker repository for other PHP and MySQL images.

- In nginx/default.conf you can change the root folder to serve a specific folder of your project instead of the whole project directory, for example `root /var/www/html/public;`, as some PHP frameworks expect.
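A sketch of that change from the shell, assuming the `root` line looks like the one in our default.conf; `set_nginx_root` is a hypothetical helper. It writes to a temporary file instead of using `sed -i`, whose syntax differs between GNU and BSD sed:

```shell
# Sketch: rewrite the `root` directive of an nginx config file.
# set_nginx_root is a hypothetical helper; indentation of the line is kept.
set_nginx_root() {
  local conf=$1 new_root=$2
  sed "s#^\([[:space:]]*\)root .*;#\1root $new_root;#" "$conf" > "$conf.tmp" &&
    mv "$conf.tmp" "$conf"
}

# Usage from ~/Code/Mouse. default.conf is copied into the image by
# nginx/Dockerfile, so the image must be rebuilt afterwards:
#   set_nginx_root nginx/default.conf /var/www/html/public
#   docker-compose build nginx && docker-compose up -d
```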

Thanks to